Learning from single events is a crucial element of human perceptual reasoning. Yet the neural networks that govern one-shot perceptual learning (as the process is known) are poorly understood.

A $1.2 million grant from the W. M. Keck Foundation will enable NYU Langone Health researchers Biyu J. He, PhD, and Eric K. Oermann, MD, to explore those networks in unprecedented depth, using advanced imaging and computational techniques. The two hope the research will also yield new insights into the perceptual anomalies associated with many psychiatric illnesses.

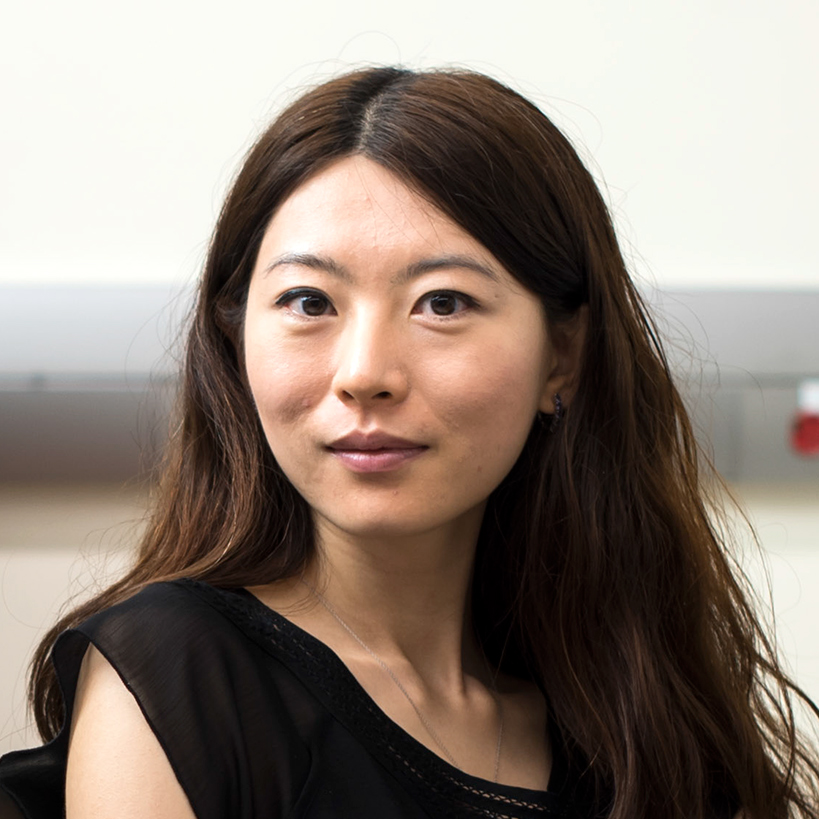

“The mechanisms thought to enable one-shot perceptual learning are also associated with a wide range of psychopathologies, including anxiety, post-traumatic stress disorder, and psychosis,” explains Dr. He, an assistant professor of neurology, neuroscience and physiology, and radiology. “Developing detailed models for how this type of learning works could help us develop better treatments.”

This project also aims to solve a very different puzzle: how to improve perceptual learning in artificial intelligence (AI). “One-shot learning is one of the hardest problems in machine learning,” says Dr. Oermann, an assistant professor of neurosurgery and radiology. “By capturing the mechanisms behind this phenomenon in humans, we hope to develop more performant computational models.”

Building on Previous Work

A classic example of one-shot perceptual learning involves a so-called Mooney image—a photo of an object that has been degraded to a low-resolution, black-and-white image that is difficult to identify. In human trials, participants can typically recognize such an image after a single exposure to the original, intact photo. Once they’ve made that connection, moreover, they can remember it for days, months, or even longer.

Previous studies by Dr. He and colleagues have suggested involvement of the frontoparietal and default-mode networks in Mooney image disambiguation. “Our findings support the idea that these areas play a special role in leveraging prior experience to guide perceptual processing,” Dr. He observes. “They also support theories positing aberrant interactions between internal priors and sensory input in psychiatric illnesses, which are often associated with abnormal patterns of activity in those brain regions.”

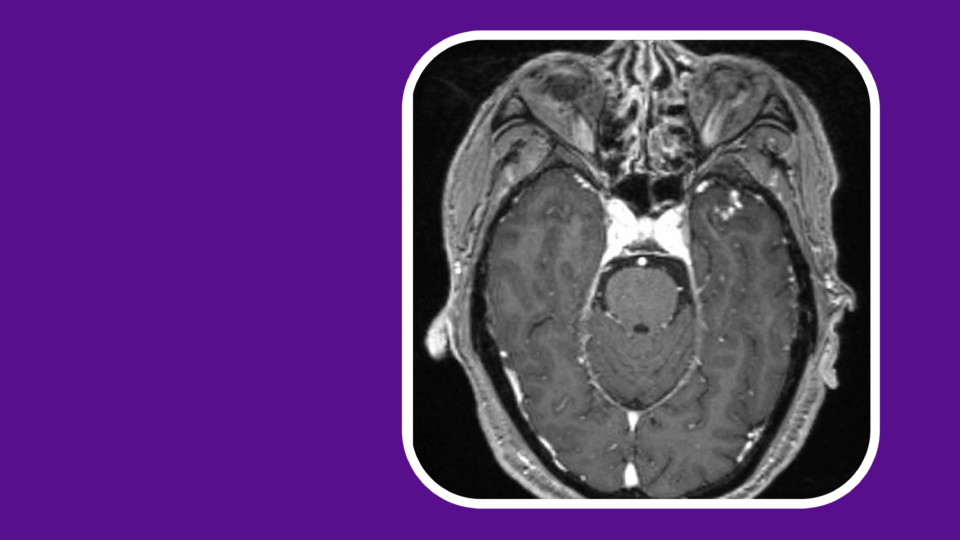

Another study, conducted by Dr. He with collaborator Larry Squire, PhD, at the University of California, San Diego, found that patients with hippocampal lesions could successfully perform a Mooney image task. The result showed that one-shot perceptual learning, unlike one-shot declarative memory, is not mediated by the hippocampus. “This suggests that non-hippocampal neural pathways may also be involved in the perceptual dysfunctions in psychiatric disorders,” says Dr. He.

Forging New Paths

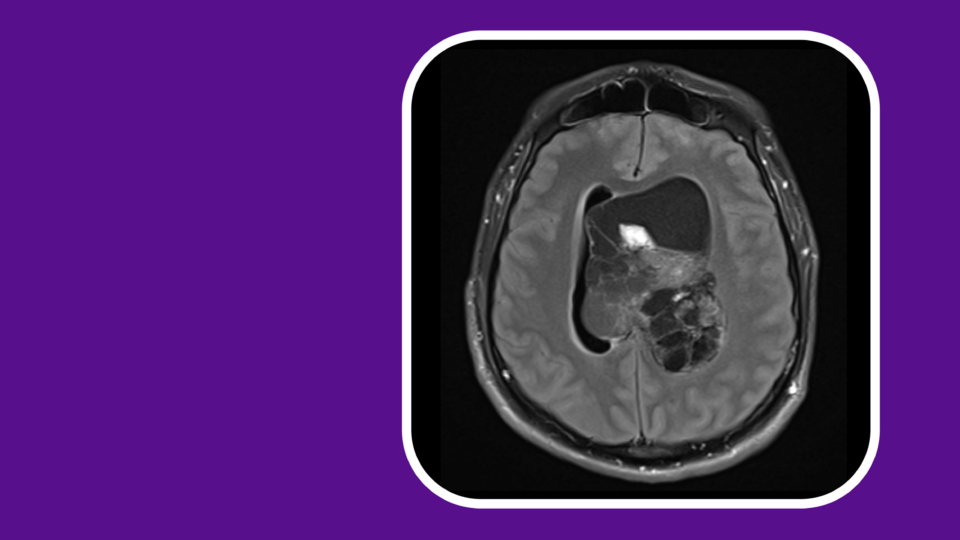

In the new study, Dr. He and Dr. Oermann will seek to identify the larger neural networks mediating one-shot perceptual learning by combining behavioral experiments with 7-Tesla functional MRI (fMRI) and magnetoencephalography (MEG) in healthy volunteers. They will also perform intracranial recordings in neurosurgical patients during learning and other tasks.

As they gather experimental data, Dr. Oermann will use the advanced machine learning capabilities available through his NYU Langone laboratory to build AI models that parallel what the team is learning about human perception. These models will be tested and refined continually as the research proceeds.

“Our major goal is to have a better understanding of how the human brain performs one-shot learning,” says Dr. Oermann. “But we also hope the neuroscience will guide us toward developing biomimetic mechanisms for solving the problem of one-shot perceptual learning in machines. Without this type of research, AI could remain over-tailored to the problem of recognizing ambiguous images, without the full robustness of biological perceptual systems.”

Both researchers point out that more robust AI models for one-shot perceptual learning could, in turn, lead to better therapies for neurological conditions, such as communication devices for patients with locked-in syndrome or robotic surgical systems for brain tumor resection.