For the world’s 39 million blind and 246 million low-vision individuals, impaired navigational ability negatively impacts income, physical and mental health, and quality of life. Advanced wearables can provide assistance superior to the traditional white cane, but many are prohibitively expensive. In addition, adapting devices for indoor use typically requires infrastructure, such as Wi-Fi or visible light communication (VLC) transmitters, that may be scarce in low- and middle-income countries.

Rusk Rehabilitation researchers at NYU Langone Health, working with partners in Thailand, are developing a platform designed to overcome these obstacles. Known as VIS4ION (Visually Impaired Smart Service System for Spatial Intelligence and Onboard Navigation), the platform pairs a wearable navigation and computing aid with cloud-based microservices.

The team, led by John-Ross Rizzo, MD, recently received funding from the NIH’s National Eye Institute and Fogarty International Center to begin testing the system at Ratchasuda College, a school for disabled students affiliated with Mahidol University in Bangkok.

Linking Inexpensive Hardware to Next-Generation Tech

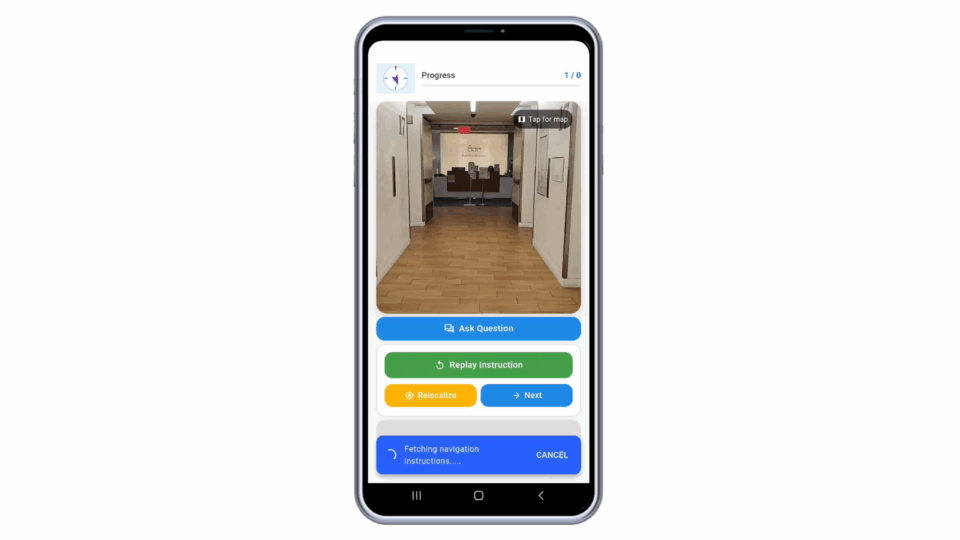

The VIS4ION platform provides real-time situational and obstacle awareness via a backpack fitted with five main components: 1) distance, ranging, and image sensors; 2) an embedded microcomputer; 3) a haptic interface worn on a waist strap; 4) a headset with binaural bone-conduction speakers and a noise-cancelling microphone; and 5) a GPS module.

“Unlike some other digital navigational aids for the visually impaired, ours does not need expensive 3D Lidar sensors or infrastructural beacons,” Dr. Rizzo explains. Instead, the platform leverages stereo cameras as its sensing foundation. Using 3D semantic mapping and localization technology, the system registers images to a database of maps recorded at centimeter scale. The wearer receives information through audible messages and vibrotactile prompts.

In areas with Wi-Fi or cellular service, the localization network utilizes cloud computing. When no connection is available, however, the system can operate fully on-board.
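
The article states only that localization uses the cloud when Wi-Fi or cellular service is available and runs on-board otherwise. A minimal sketch of that dispatch pattern might look like the following, assuming hypothetical `is_online`, `cloud_localize`, and `onboard_localize` callables supplied by the caller. Falling back when a cloud request fails partway through is an added assumption, not a documented VIS4ION feature.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def localize_frame(frame: bytes,
                   is_online: Callable[[], bool],
                   cloud_localize: Callable[[bytes], T],
                   onboard_localize: Callable[[bytes], T]) -> T:
    """Prefer the cloud localizer when connected; otherwise run on the wearable itself."""
    if is_online():
        try:
            return cloud_localize(frame)
        except (ConnectionError, TimeoutError):
            pass  # connection dropped mid-request: fall through to on-board processing
    return onboard_localize(frame)
```

Keeping the on-board path as the unconditional fallback means the wearer still receives guidance whenever the network is absent or unreliable.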

Another cost-saving feature is the platform’s use of images acquired via Google and through citizen science. Volunteers can record their wayfinding with mobile phones, uploading images and GPS coordinates to the mapping database. Participants in trials of VIS4ION will also help populate the database during navigation.

Refining the System in a Global Setting

In the first phase of the NIH-funded study, the researchers will implement semantic segmentation and image-query-based localization networks on the Ratchasuda campus. They will deploy the augmented platform with visually impaired students and assess its acceptability, appropriateness, and feasibility.

If the first phase succeeds, the team will progress to the second phase: further enhancing the platform over an extended period and selecting an additional urban area in Bangkok for generalizability testing.

“Although initial costs of VIS4ION may be high, we anticipate a production cost of about half that for available systems,” Dr. Rizzo says. “We’re using an approach that leverages input from study participants for improved function and usability, aimed at ultimately benefitting visually impaired persons worldwide.”