For patients who have lost their ability to speak—due to a stroke, amyotrophic lateral sclerosis, or other conditions that disconnect the brain’s speech signaling pathways—artificial intelligence and brain–computer interface systems have opened the door to neural speech prostheses. However, few such systems aim to mimic an individual’s unique voice, so that most yield generic speech that can sound robotic.

Aided by machine learning algorithms, a research group at NYU Langone Health has developed a unique speech decoder and synthesizer system that produces natural-sounding speech closely matching individuals’ voices. The team’s latest study—one of the largest in its field—tested the approach in 48 patients, showing how the technique can accurately re-create a broad range of speech.

Here’s more information about how the system works and how it could lead to far more individualized neural speech prostheses.

How does the new vocal reconstruction system work?

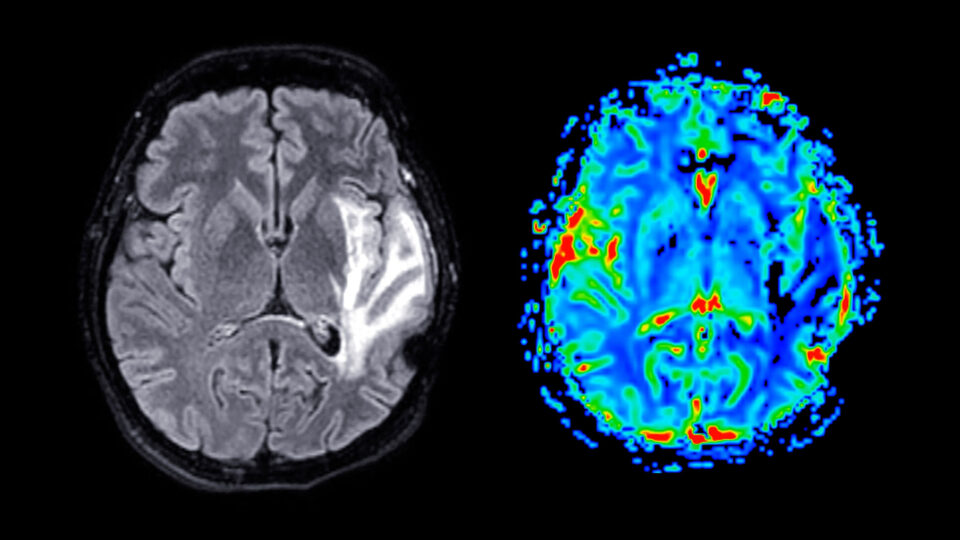

Speech can be mapped and synthesized from neural electrocorticography (ECoG) signals to a time–frequency representation of speech, or a spectrogram, says neuroscientist Adeen Flinker, PhD. He and colleagues first mapped out a set of 18 speech parameters that represent how the human voice changes in frequency, pitch, and other characteristics over time. A deep neural network can learn and re-create that complex mapping.

To capture an individual’s unique speech, the researchers trained a model on a prerecording of each patient’s voice. A technique called an autoencoder was used to constrain the 18 parameters representing that voice. Machine learning equations then helped combine the parameters into a more accurate re-creation of the person’s speech patterns.

How do researchers gather the necessary data to decode speech?

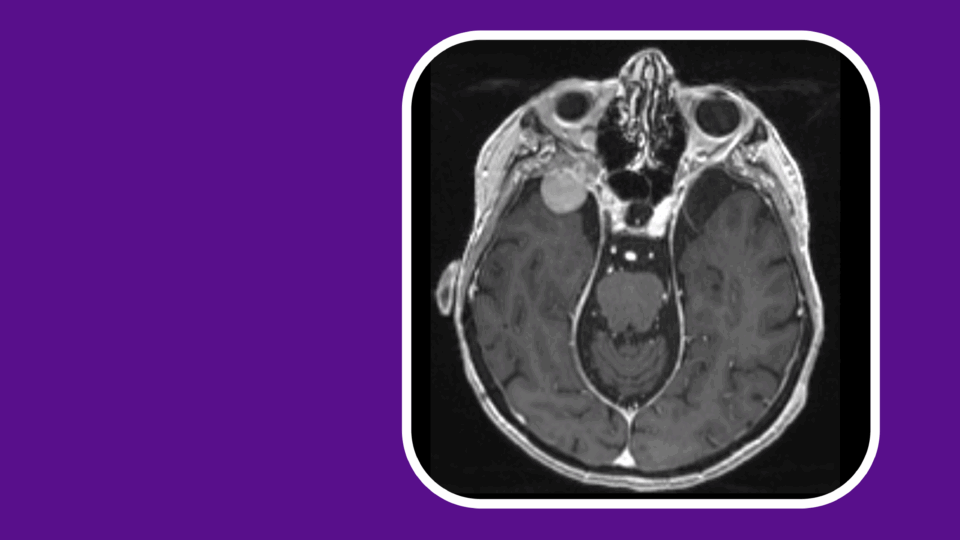

Researchers have learned to decode speech from the brain signals of patients undergoing evaluation for epilepsy surgery. Electrodes implanted for presurgical evaluation also provide the necessary ECoG recordings for vocal reconstruction. Data from electroencephalography (EEG) electrodes that monitor activity from outside the skull are not sufficient, because the neural signals are distorted after traveling through bone and other tissue.

What makes this approach unique?

“Instead of generating a generic voice or robotic voice, we first learn the patient’s voice in a machine learning manner,” Dr. Flinker says. That approach yields a more realistic reproduction of the patient’s speech.

Additionally, unlike similar studies, which have included only a handful of patients, the team’s study represents the largest cohort to date in which a neural speech decoder for unique speech has been applied, proving that the approach is both reproducible and scalable. “We really wanted to push the envelope and see to what degree we can scale this up,” he says.

If the approach pans out, it could lead to clinical trials of brain–computer interface technologies that reproduce an individual’s own voice. In addition, the researchers have shared an open-source neuro-decoding pipeline to bolster collaboration and enable replication of the group’s results.

What other new information did the study reveal about speech signaling?

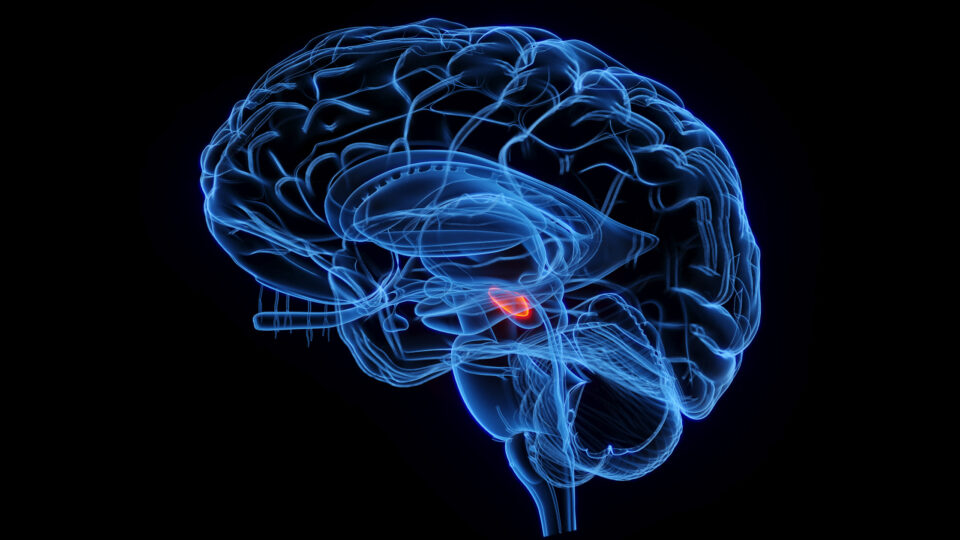

Language signals are typically associated with the brain’s left hemisphere, but the researchers used available data from the right hemisphere of 16 patients to produce “robust decoding” for them as well, Dr. Flinker says. “That’s a very exciting finding that hasn’t been shown before, especially not on this scale.”

If neural signals from the right hemisphere prove sufficient for decoding speech, he says, it could open up the prosthetic technology to patients with aphasia due to left-hemisphere damage. “The fact that we can decode from the right hemisphere is the first line of evidence that we can perhaps restore and synthesize speech based on intact right hemisphere signals and not the damaged left hemisphere,” Dr. Flinker says.

What’s next for this work?

The next immediate step, Dr. Flinker says, is to develop approaches that do not require a prerecording of a patient’s voice, while also expanding the system’s vocabulary to include more words and sentences. The team will then need to assess the efficacy of the technology on clinical trial participants with implanted electrodes who are unable to speak. Another significant challenge lies in designing a clinical trial for patients with aphasia to determine how effectively the technology functions when the left hemisphere is compromised.