Central retinal vein occlusion (CRVO) can cause vision loss ranging from minor to severe. Although excellent treatments for CRVO exist and vision can recover, the ability to predict the extent of improvement is limited.

New findings from investigators at NYU Langone Health indicate a machine learning algorithm could predict visual and anatomic outcomes in patients with macular edema following CRVO (MEfCRVO) being treated with intravitreal aflibercept injection. Additionally, the algorithm predicts dosing frequency with high accuracy.

“Most patients ask, ‘How many injections will I need?’ What we’re trying to do is provide some prognostic value when the patient shows up to see the physician,” says vitreoretinal surgeon Yasha S. Modi, MD, who was part of the team that helped to create the model.

While more research is needed before the tool can be introduced in the clinic, the new findings validate the predictive model and serve as a proof of concept for second- and third-generation models.

“With central retinal vein occlusion, there are hugely disparate outcomes. The best predictor of long-term vision is the visual acuity you present with,” Dr. Modi says. “We have all this sophisticated imaging and other relevant data, but we don’t know if those are relevant in a predictive way.”

Predicting Outcomes

The researchers used datasets from the COPERNICUS and GALILEO clinical trials, which tested intravitreal aflibercept injection for MEfCRVO. Both trials mandated six monthly injections; patients were then moved into a pro re nata (PRN; as needed) window and followed for at least one year.

“Our goal was to see if we could predict what would happen to individuals after they reached the PRN threshold,” says Dr. Modi.

The algorithm evaluated best-corrected visual acuity (BCVA) and central subfield thickness (CST) over a range of time points to predict outcomes at the one-year mark. BCVA measurements at weeks 20 and 24 (the visits before switching from monthly to PRN treatment) were the best predictors of change in BCVA. Baseline CST was a predictor of change in CST, but not of absolute CST.

“The algorithm was very good at predicting absolute visual acuity six months after switching over to PRN treatment, as well as change in visual acuity,” Dr. Modi says. “It was less reliable at predicting the absolute central subfield thickness, however.”
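
As a rough illustration of the approach described above, the sketch below ranks candidate time points by how strongly they correlate with a one-year outcome, then fits a one-variable linear model. It is not the study's algorithm, which the article does not specify. The Pearson ranking, the linear fit, the field names (`baseline`, `week20`, `week24`, `week52`), and the synthetic letter scores are all assumptions made for illustration, and no trial data are used.

```python
import math
import random


def make_synthetic_cohort(n, seed=0):
    """Generate made-up BCVA letter scores. This is NOT trial data."""
    rng = random.Random(seed)
    cohort = []
    for _ in range(n):
        baseline = rng.uniform(20, 70)
        week20 = baseline + rng.uniform(5, 25)
        week24 = week20 + rng.uniform(-3, 3)
        # Assumption for illustration: the one-year value tracks week 24.
        week52 = week24 + rng.uniform(-5, 5)
        cohort.append(
            {"baseline": baseline, "week20": week20,
             "week24": week24, "week52": week52}
        )
    return cohort


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def rank_predictors(cohort, candidates, outcome):
    """Sort candidate fields by absolute correlation with the outcome."""
    ys = [p[outcome] for p in cohort]
    scores = [
        (name, abs(pearson([p[name] for p in cohort], ys)))
        for name in candidates
    ]
    return sorted(scores, key=lambda item: item[1], reverse=True)


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


cohort = make_synthetic_cohort(200)
ranking = rank_predictors(cohort, ["baseline", "week20", "week24"], "week52")
slope, intercept = fit_line(
    [p["week24"] for p in cohort], [p["week52"] for p in cohort]
)

for name, score in ranking:
    print(f"{name}: |r| = {score:.3f}")
print(f"week52 ~ {slope:.2f} * week24 + {intercept:.2f}")
```

Because the cohort is generated so that later visits track the outcome, the ranking reflects only how the synthetic data were built and is not a clinical finding.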

Translating Machine Learning to the Clinic

Algorithms, while very good at diagnosing the stages of diabetic retinopathy (DR), are currently not able to outperform humans in differential diagnosis, Dr. Modi says. Yet with the sheer number of individuals who need to be screened for DR nationwide, there is value in applying assistive algorithms.

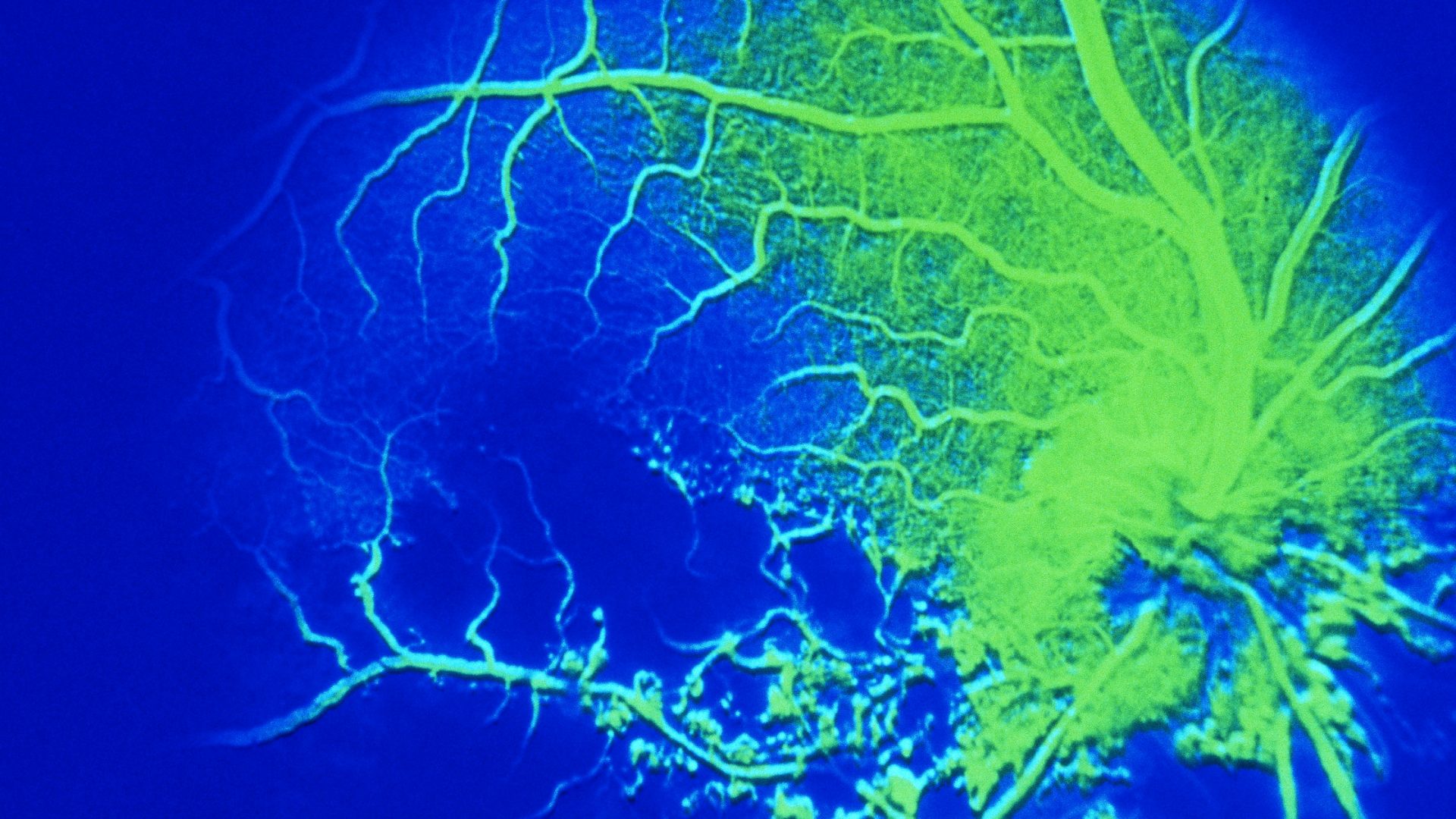

NYU Langone has a remote DR screening program in which a semi-automated camera in the field takes an image, which is then sent to a retinal specialist who grades it as mild, moderate, severe, or proliferative DR.

Algorithms don’t have that granularity just yet, Dr. Modi explains. “There are, however, already FDA-approved AI cameras that can decipher moderate diabetic retinopathy or worse. This binary branch point then triggers an alert to schedule an in-person visit.”

It’s easy to see the future as these algorithms get progressively better, he adds.

“What if in Walmart or CVS, for instance, patients could get a real-time diagnosis using an algorithm to read the image? If the algorithm detects a significant abnormality, you see a doctor right away. If not, you get screened annually.”
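
The binary branch point Dr. Modi describes amounts to a simple threshold rule on a graded scale. The sketch below is a minimal illustration of that idea, not the logic of any FDA-approved device. The `DRGrade` scale, the added `NONE` level, the `triage` function, and the returned strings are hypothetical.

```python
from enum import IntEnum


class DRGrade(IntEnum):
    """Ordered DR severity levels (hypothetical encoding)."""
    NONE = 0  # assumed "no DR" level; not named in the article
    MILD = 1
    MODERATE = 2
    SEVERE = 3
    PROLIFERATIVE = 4


# "Moderate diabetic retinopathy or worse" triggers a referral.
REFERRAL_THRESHOLD = DRGrade.MODERATE


def triage(grade):
    """Collapse a graded result into a binary follow-up decision."""
    if grade >= REFERRAL_THRESHOLD:
        return "schedule in-person visit"
    return "rescreen in 12 months"


for grade in DRGrade:
    print(grade.name, "->", triage(grade))
```

Using an ordered enum keeps the threshold comparison explicit, so moving the cutoff means changing a single constant.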

Augmenting the Algorithm with Optical Coherence Tomography

Moving forward, the team hopes to conduct a prospective study to test the CRVO algorithm, perhaps including optical coherence tomography (OCT) in a supervised fashion. “These are older studies; OCT images are much better now,” Dr. Modi says. He is interested in changes on OCT that might serve as predictive biomarkers.

“However, the more you annotate the image, the more you add your own bias when you’re developing an algorithm,” he notes. “It’s a delicate balance.”